How Does ChatGPT Work?

Max T

Nov 3, 2024

From creating content to solving complex problems, ChatGPT seems to have a skill for just about everything. And just when we think we know what it can do, it surprises us with new capabilities that keep the excitement alive. Every day, we’re learning more about the incredible potential it holds.

But how does ChatGPT pull off these impressive feats? How exactly does it work?

To understand the magic behind ChatGPT, let’s dive into the technology, training, and design that make this AI chatbot such a powerful tool.

How Was ChatGPT Built?

To understand how ChatGPT was built, we first need to look at its origins and the powerful technology that drives it.

As impressive as ChatGPT is, it’s not magic. Like every remarkable piece of software, ChatGPT is the result of human ingenuity and relentless innovation. This AI chatbot didn’t emerge out of nowhere—it’s a carefully engineered tool, designed to make advanced language understanding accessible to everyone.

ChatGPT was built by OpenAI, an AI research company that has been pushing the boundaries of artificial intelligence. OpenAI has also developed other influential tools, such as DALL-E, which generates images from text descriptions, and Codex, which assists with coding tasks. ChatGPT is one of its most influential projects yet.

At the heart of ChatGPT is the GPT technology. GPT stands for "Generative Pre-trained Transformer," and it's what gives ChatGPT the ability to understand and generate human-like responses.

When you visit ChatGPT on the web, for example at chat.openai.com, you’re interacting with what feels like an intelligent, conversational assistant. But here’s an interesting point: ChatGPT itself, the interface you see on the screen, isn’t the actual “model.”

It’s a user-friendly interface that allows you to interact with the real intelligence behind the scenes: the underlying GPT models. In other words, the ChatGPT interface acts as the “face” of the operation, providing an accessible way for you to interact with the powerful language models that run the show.

By building on top of GPT technology, ChatGPT is able to communicate, assist, and even entertain with a level of fluency that has made it an internet sensation. To learn how this fluency is achieved, consider taking a ChatGPT course that explores its technical and practical uses.

But before we go further, let’s talk about GPT. What exactly is it, and how does it fit into the ChatGPT ecosystem?

What is GPT, Anyway?

We know that ChatGPT is built on GPT technology. In fact, GPT is the backbone of ChatGPT—it’s the model that makes ChatGPT what it is. It’s what gives it the ability to understand questions, generate responses, and feel conversational. But what exactly is GPT?

Let’s break it down, starting with those three letters: Generative Pre-trained Transformer. Each part of that name describes something fundamental about how ChatGPT works.

Generative: The “generative” part means that ChatGPT can create things. Specifically, it has the ability to generate natural language text. So, when you ask ChatGPT a question or give it a prompt, it can respond by creating a unique, relevant answer rather than just picking from pre-written responses. This ability to “generate” text makes ChatGPT flexible and responsive, giving it the power to answer questions, tell stories, or even offer advice—all by creating new text on the spot.

Pre-trained: The “pre-trained” part refers to how ChatGPT learns. Imagine studying for a quiz in school; you need to go through all the material beforehand. In a similar way, ChatGPT has been “pre-trained” on a large amount of text data before it ever interacts with users. This pre-training process gives ChatGPT its foundational knowledge on a wide range of topics. So, when you ask ChatGPT a question, it can draw on this knowledge to provide answers because it’s already been trained on relevant information.

Transformer: Finally, we have the “transformer.” This is the underlying machine learning architecture that powers GPT. Without getting too technical, a transformer is a kind of neural network that processes text in layers, and each layer uses a mechanism called attention to weigh how relevant every word is to the words around it. This is what allows ChatGPT to understand the context of words in a sentence, making its responses coherent and contextually appropriate.
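To make “context” a little more concrete, here is a minimal sketch of scaled dot-product attention, the core operation inside a transformer layer, written in plain Python. It is a toy under stated assumptions: the three-word sentence and its 2-dimensional vectors are made up for illustration, and the same vectors are reused as queries, keys, and values instead of coming from learned projections. It does include the causal mask that GPT-style models use, so each word can only look at itself and the words before it.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.

    For each position i, score positions 0..i by query-key similarity,
    scale by sqrt(d), normalize with softmax, and return the weighted
    average of the visible value vectors along with the weights.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: position i may only attend to positions 0..i.
        visible_keys = keys[: i + 1]
        visible_values = values[: i + 1]
        scores = [dot(q, k) / math.sqrt(d) for k in visible_keys]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, visible_values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for "the", "river", "bank".
# A real model derives queries, keys, and values from learned projections;
# this toy reuses the same vectors for all three.
words = ["the", "river", "bank"]
vectors = [[0.1, 0.0], [1.0, 0.2], [0.9, 0.3]]
outputs, weights = causal_self_attention(vectors, vectors, vectors)

# How much "bank" (the last word) attends to each word in the sentence.
print({w: round(x, 2) for w, x in zip(words, weights[2])})
```

In this toy setup, “bank” places more weight on “river” than on “the”, which is the kind of signal a real model can use to tell a riverbank from a financial bank. Real transformers run many of these attention “heads” in parallel, across dozens of layers, on vectors with thousands of dimensions.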

Putting it all together, Generative Pre-trained Transformer (GPT) is a language model trained on a large dataset with the goal of generating text that resembles human language. It can respond to prompts by creating answers, explanations, or solutions in a way that feels natural and conversational.

I know you’ve probably heard about different GPT models, like GPT-3.5 Turbo, GPT-4, GPT-4o mini, and others.

To make sense of this, it’s helpful to understand that all of these are still fundamentally “GPT” models.

They all share the same operating principles underneath. What differentiates them is that each new version typically brings improvements or refinements over the previous ones. They’re generational, each one building on and advancing the one before it.

Think of it like going through grades in school. GPT-1 would be like grade one, where the basics are learned. As the models advance—GPT-2, GPT-3, and so on—they’re like moving up to higher grades. At each level, the model “learns” a little more, gaining new capabilities, and improving in accuracy, flexibility, and understanding.

For example, a student in grade six might learn more complex concepts than they did in grade one. They’ll still retain everything they learned in the earlier grades, but now they’ll have a wider base of knowledge and more tools to solve problems.

By the time they reach grade seven, they’re not just reviewing what they learned in grade six; they’re also adding new skills and knowledge. The same principle applies to GPT models. GPT-3.5, for example, doesn’t abandon what GPT-3 was capable of but builds on it with better understanding, more training data, and enhanced algorithms.

So, when you hear terms like “GPT-3.5 Turbo” or “GPT-4,” think of them as different grades. They’ve all been through similar foundational training, but each one has advanced further in knowledge and complexity.

While they may all fundamentally function in the same way, newer models are designed to handle questions with more nuance, generate responses more accurately, and even solve issues the earlier models may have struggled with. And each model, in turn, has its own strengths and may bring unique benefits, depending on the task.

So, we know what GPT is, and we know it has been trained. But that raises a new question: How was ChatGPT actually trained? What goes into teaching an AI model like GPT?

How Was ChatGPT Trained?

Training a GPT model is an extensive process. The base models, like GPT-3 or GPT-4, are pre-trained on vast datasets containing hundreds of billions of words drawn from books, websites, and other sources of text.

This data is used to teach the model language patterns, grammar, facts, reasoning, and a wide range of general knowledge. During pre-training, the model learns to predict the next word in a sentence (more precisely, the next token, which may be a whole word or a piece of one), developing an understanding of context and meaning over time.
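The next-word objective can be illustrated with a deliberately tiny stand-in: a bigram model that simply counts which word follows which in a small, made-up corpus. This is a heavy simplification, not how GPT works internally, since GPT uses a neural network that conditions on the whole preceding context rather than only the last word. The task, though, is the same idea: given the text so far, estimate a probability for each possible next word, then extend the text one word at a time. The function names and corpus here are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers

def next_word_probabilities(model, word):
    """Turn follow-up counts into probabilities that sum to 1."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # word was never seen with a successor
    return {w: c / total for w, c in counts.items()}

def generate(model, start, max_words=5):
    """Greedy generation: repeatedly append the most likely next word."""
    output = [start]
    for _ in range(max_words):
        probs = next_word_probabilities(model, output[-1])
        if not probs:
            break
        output.append(max(probs, key=probs.get))
    return " ".join(output)

# Tiny hypothetical training corpus ("." is treated as a word).
corpus = (
    "the cat sat on the mat . "
    "the cat chased the mouse . "
    "the dog sat on the rug ."
)
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "the"))
print(generate(model, "the"))
```

The `generate` function picks the single most likely word at each step (greedy decoding). Chat models usually sample from the probability distribution instead, which is one reason the same prompt can produce different answers.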

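Pre-training alone produces a model that is good at continuing text but not necessarily at following instructions or holding a conversation. To turn it into ChatGPT, OpenAI fine-tuned it using two core methods: supervised learning and reinforcement learning. Both are essential parts of the training process, but they work in different ways.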

First, let’s talk about supervised learning.

In supervised learning, the model is trained by being shown a large set of examples where both the input and the expected output are provided. Think of it like a teacher giving you questions along with the answers.

During supervised training, the model is fed sentences or prompts, and human trainers provide ideal responses. The model then learns from these examples, adjusting its internal parameters to improve its ability to produce similar, high-quality responses on its own.

For ChatGPT, this might mean showing the model questions or conversational prompts and teaching it the kind of response it should give.

For example, the model might be shown a question like, “What is the capital of Egypt?” with the answer “Cairo.” By seeing lots of these question-answer pairs, the model learns patterns in language and develops a baseline understanding of how to respond to various prompts.
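Here is a minimal sketch of what “adjusting its internal parameters” means for a single question-answer pair. The candidate answers and their starting scores are hypothetical, and, as a simplification, the sketch adjusts those scores directly rather than the billions of network weights that produce them in a real model. It uses cross-entropy loss, a standard way to measure how much probability a model gives to the expected answer, and takes one gradient-descent step per iteration.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits.values())
    exps = {w: math.exp(v - m) for w, v in logits.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def cross_entropy(logits, target):
    """Loss is low when the model gives high probability to the target."""
    return -math.log(softmax(logits)[target])

def sgd_step(logits, target, lr=1.0):
    """One gradient-descent step on the scores themselves.

    For softmax + cross-entropy, the gradient with respect to each score
    is (predicted probability - 1) for the target and
    (predicted probability) for every other answer.
    """
    probs = softmax(logits)
    return {
        w: v - lr * (probs[w] - (1.0 if w == target else 0.0))
        for w, v in logits.items()
    }

# Hypothetical scores for candidate answers to
# "What is the capital of Egypt?" before training on this example.
logits = {"Cairo": 0.5, "Alexandria": 1.0, "Giza": 0.2}
target = "Cairo"  # the answer provided by the human trainer

for step in range(3):
    loss = cross_entropy(logits, target)
    p_target = softmax(logits)[target]
    print(step, round(loss, 3), round(p_target, 3))
    logits = sgd_step(logits, target)
```

Each step nudges the score for “Cairo” up and the others down, so the printed loss falls and the probability of the expected answer rises. In real supervised fine-tuning, the same kind of loss is applied to every token of the trainer-written response.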

In short, supervised learning builds on the knowledge the model gained in pre-training and teaches it how to answer different types of questions accurately and in a conversational format.

But while this process is helpful, it only gets the model so far—it needs another layer of training to really fine-tune its responses to align with human preferences and expectations. That’s where reinforcement learning comes in.

Now, let’s move to reinforcement learning.

After the supervised learning phase, ChatGPT undergoes further training through a process called Reinforcement Learning from Human Feedback (RLHF). This method is more interactive and allows the model to “learn” from its mistakes and successes in a way similar to how people learn from feedback.

Here’s how it works.

The model generates several responses to each of a wide range of prompts, and human trainers rank those responses from best to worst. For instance, the model might generate several answers to a question like “What is a wormhole?”

Human trainers review the answers, ranking them based on factors like clarity, accuracy, and helpfulness. These rankings are then used to train a separate reward model that learns to predict which responses people prefer. The chatbot is optimized against that reward model, which rewards responses that align closely with human preferences and penalizes ones that fall short.
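A common way to turn rankings into a training signal, described for example in OpenAI’s InstructGPT work, is to split each ranking into pairs and train a reward model with a pairwise loss. The sketch below shows only that conversion and the loss. The response labels and reward scores are hypothetical, and the later reinforcement-learning step that optimizes the chatbot against the trained reward model is not shown.

```python
import math
from itertools import combinations

def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs.

    combinations() keeps the input order, so the first item of each
    pair is always the one the trainer ranked higher.
    """
    return list(combinations(ranked_responses, 2))

def pairwise_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_preferred - r_rejected)).

    Small when the preferred response already scores clearly higher,
    large when the reward model has the pair backwards.
    """
    diff = reward_preferred - reward_rejected
    return math.log1p(math.exp(-diff))

# Hypothetical answers to "What is a wormhole?", ranked best to worst.
ranking = ["clear_and_accurate", "accurate_but_vague", "off_topic"]
pairs = ranking_to_pairs(ranking)

# Hypothetical scores from a reward model that has not been trained yet.
rewards = {"clear_and_accurate": 0.2, "accurate_but_vague": 0.5, "off_topic": -0.1}

for better, worse in pairs:
    loss = pairwise_loss(rewards[better], rewards[worse])
    print(f"{better} > {worse}: loss = {loss:.3f}")
```

The loss is largest for the pair the untrained reward model gets backwards (it scores the vague answer above the clear one), so training would push those two scores apart in the direction the trainer preferred.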

Through this feedback, the model learns to prioritize responses that are more likely to be useful, friendly, and conversational. In a sense, reinforcement learning helps the model “adapt” and become more responsive to what people actually want when they ask it questions or engage in conversation.

So, while this is a very simplified view of how ChatGPT is trained, the key thing to remember is the role both training approaches play in making ChatGPT the powerhouse that it is.

Supervised learning gives the model a solid baseline by showing it what good answers look like, while reinforcement learning refines that baseline, pushing the model toward responses that align better with human preferences.

Together, these two training methods allow ChatGPT to be both knowledgeable and engaging, able to answer questions accurately while also responding in a way that feels natural and human-like.

What Kind of Information Does ChatGPT Actually Learn From?

Now that we’ve covered the training methods, let’s talk about the actual data ChatGPT learns from. Training a model like GPT requires a vast and diverse set of text data, which helps it learn about language, concepts, facts, and conversational cues.

But what kinds of sources go into this data? And, importantly, what doesn’t ChatGPT learn from?

To handle a wide range of tasks, ChatGPT is trained on a vast dataset drawn from many kinds of sources, including books, websites, scientific articles, blogs, and forums. This diversity is what lets ChatGPT answer both technical questions and casual inquiries, and it’s also why it can vary its tone based on the topic or prompt.

The idea is to expose ChatGPT to a broad spectrum of human language and ideas, helping it produce conversational flows that feel natural and relevant to users.

While ChatGPT is trained on a massive volume of text, it’s worth noting that its knowledge is purely derived from language patterns. It doesn’t actually “understand” or “experience” the real world as we do.

This lack of direct experience means ChatGPT can only offer knowledge that’s present in its training data. Moreover, ChatGPT’s “knowledge cutoff,” the date after which its training data ends, limits its awareness of recent developments. So, if its training data ended in, say, 2023, it wouldn’t know about anything that happened after that point.

Also, ChatGPT’s training typically excludes private or proprietary information. Its responses are generated from patterns learned from that largely public training data, rather than looked up in real-time or sensitive data sources.

This curated selection helps ensure that ChatGPT remains reliable, offering responses that are based on general knowledge rather than private, confidential, or real-time information.

In short, while ChatGPT’s training data enables it to be a flexible and wide-ranging conversational partner, it also has deliberate constraints. These limitations are key to balancing its usability and safety, so it can respond to a broad spectrum of questions while respecting privacy boundaries and maintaining relevance across general knowledge topics.

Extend ChatGPT Training With Your Own Data

We’ve taken a journey through the ways ChatGPT was trained, learning how it developed into a versatile tool that can answer questions across a wide range of fields.

This training is what enables ChatGPT to assist you with questions on health, solve mathematical problems, provide business insights, and much more.

ChatGPT’s ability to tap into vast, diverse knowledge makes it a powerful asset, allowing it to assist in an impressive array of subjects.

However, despite all of its strengths, ChatGPT does have its limitations. While it can answer questions on many topics, it’s still bound by the general information it was trained on and doesn’t automatically know about specialized or niche subjects, like the specifics of your business or your unique use case.

This “blind spot” is natural for any AI trained on broad data; ChatGPT can only go so far in understanding the unique nuances of your specific needs.

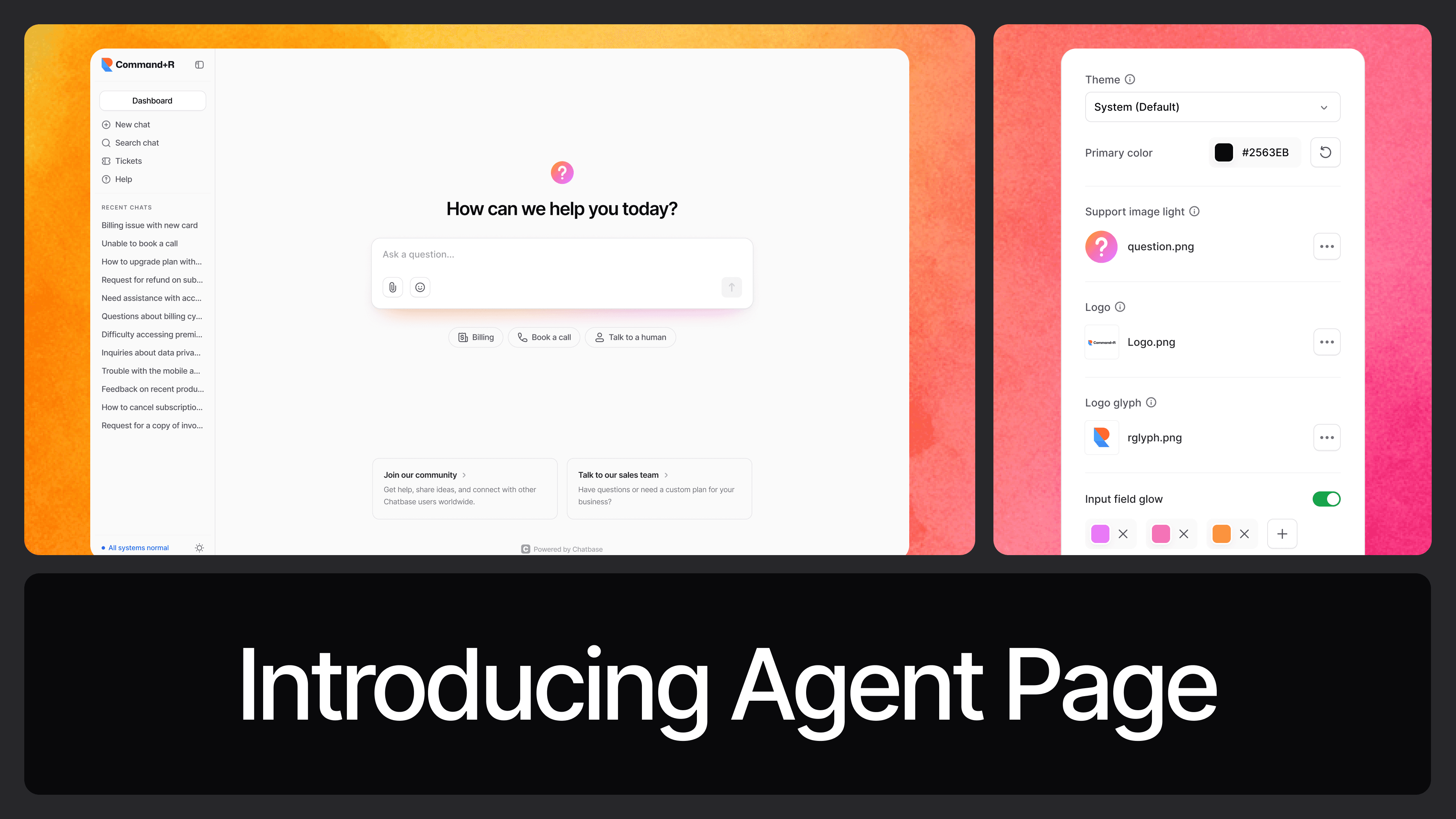

That’s where tools like Chatbase can be incredibly useful. Chatbase allows you to extend ChatGPT’s training, customizing it with specialized knowledge specific to your business or unique use case.

Whether you want ChatGPT to serve as a customer support chatbot trained to understand your products or a knowledge base for your team with tailored responses, Chatbase lets you build a highly focused AI that knows your business inside out.

With Chatbase, you can even create distinct personalities and tailored interactions, allowing ChatGPT to respond in ways that feel true to your brand and purpose.

Imagine embedding a chatbot on your website that can guide visitors with in-depth knowledge of your services or developing an internal tool that acts as a go-to resource for your employees. The ability to further train ChatGPT unlocks countless possibilities to deploy it in innovative ways that go far beyond what it could offer out of the box.

If you’re ready to explore how ChatGPT can become a valuable, tailored tool for your unique needs, try Chatbase.

Chatbase enables you to train ChatGPT to understand your specific context, whether you’re looking to embed a chatbot on your website, create a customized customer support assistant, or even develop an API-accessible, fine-tuned AI for unique business applications.

With Chatbase, developers, business owners, and innovators can extend ChatGPT's capabilities and ensure it has the knowledge needed to excel in specific tasks and roles.

So why settle for generic answers when you can train an AI to speak directly to your audience, understand your business, and elevate the customer experience?

Discover what Chatbase can do for your business today—sign up and start crafting your own specialized AI.
